Publications

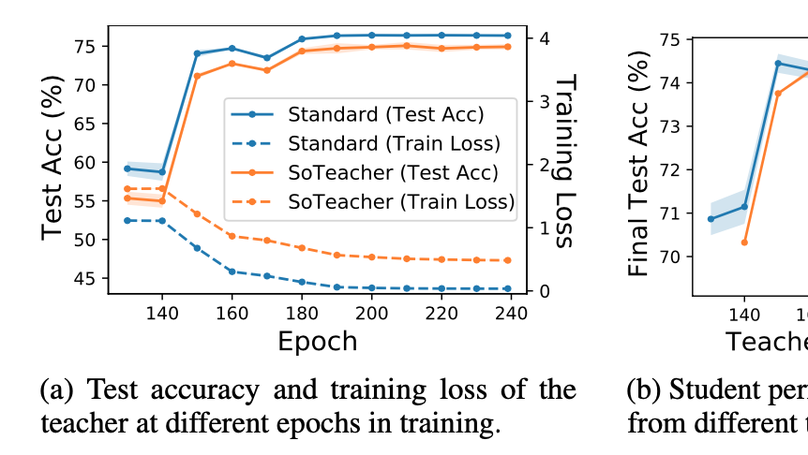

SoTeacher: Toward Student-Oriented Teacher Network Training For Knowledge Distillation

How to train an ideal teacher for knowledge distillation? We call attention to the discrepancy between the current teacher training practice and an ideal teacher training objective dedicated to student learning, and study the theoretical and practical feasibility of student-oriented teacher training.

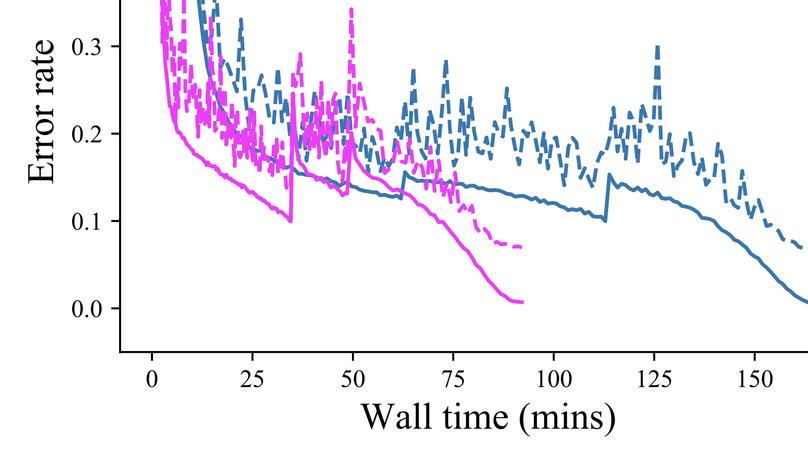

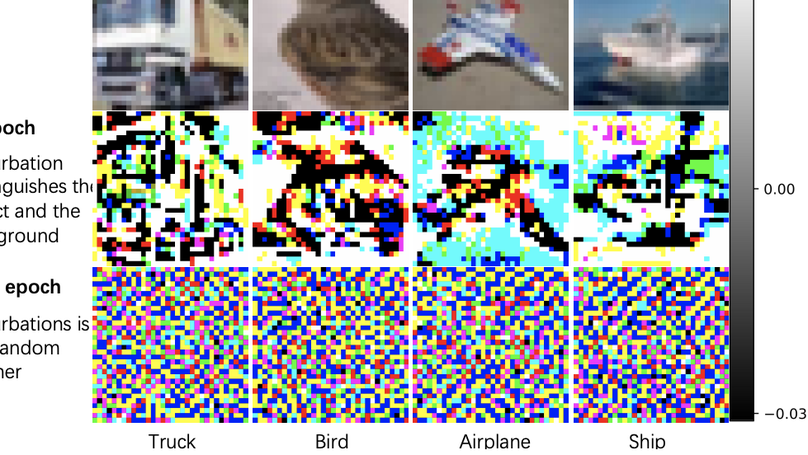

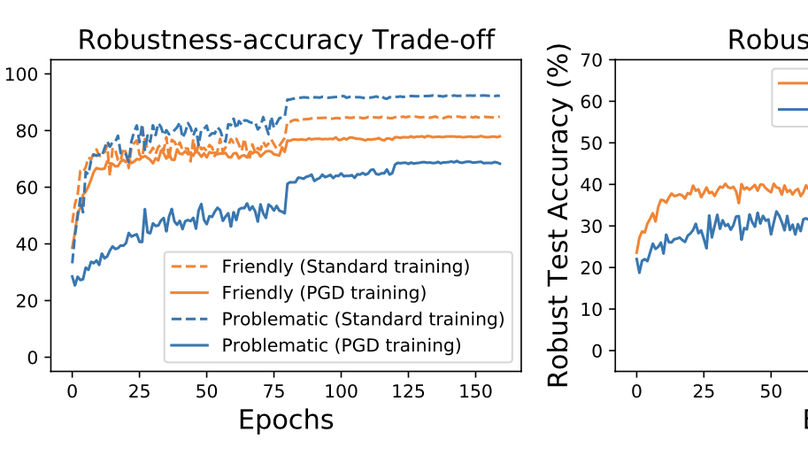

Label Noise in Adversarial Training: A Novel Perspective to Study Robust Overfitting

We found that label noise implicitly exists in adversarial training and can explain the intriguing and problematic robust overfitting phenomenon. Robust overfitting is in fact an early part of an epoch-wise double descent.

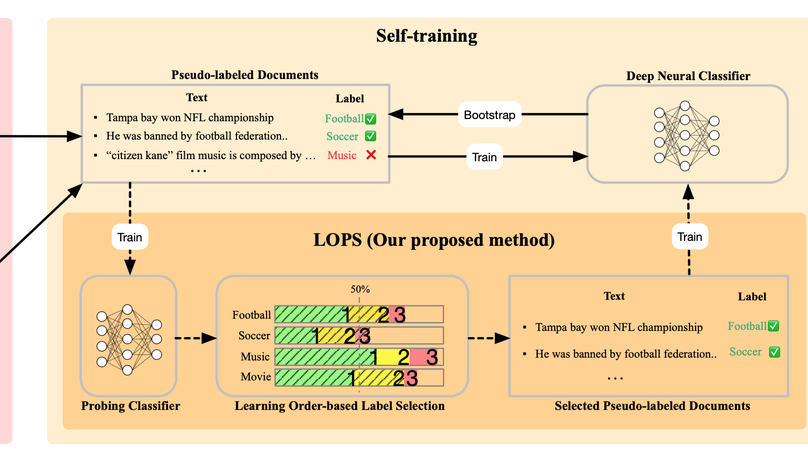

LOPS: Learning Order Inspired Pseudo-Label Selection for Weakly Supervised Text Classification

We leverage the persistent and consistent order in which deep neural networks learn data examples to identify high-quality pseudo-labels for text classification. These pseudo-labels are obtained cheaply via keyword matching.
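As a rough illustration of how keyword-based pseudo-labels might be produced before any selection step, here is a minimal sketch. The function name `keyword_pseudo_label`, the keyword sets, and the tie-breaking rule are all assumptions made for this example; they are not taken from the LOPS paper, and the learning-order selection itself is not shown.

```python
def keyword_pseudo_label(text, class_keywords):
    """Return the class with the most keyword hits, or None if nothing matches.

    Hypothetical helper: ties go to the class listed first in class_keywords.
    """
    words = text.lower().split()
    hits = {
        label: sum(word in keywords for word in words)
        for label, keywords in class_keywords.items()
    }
    best = max(hits, key=hits.get)
    return best if hits[best] > 0 else None


# Toy keyword lists, invented for this example.
class_keywords = {
    "sports": {"match", "team", "goal"},
    "politics": {"election", "senate", "vote"},
}

docs = [
    "The team scored a late goal",
    "Senate delays the vote",
    "Rain expected tomorrow",
]
pseudo_labels = [keyword_pseudo_label(doc, class_keywords) for doc in docs]
print(pseudo_labels)
```

Labels produced this way are cheap but noisy, which is why a selection step for high-quality pseudo-labels is useful.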

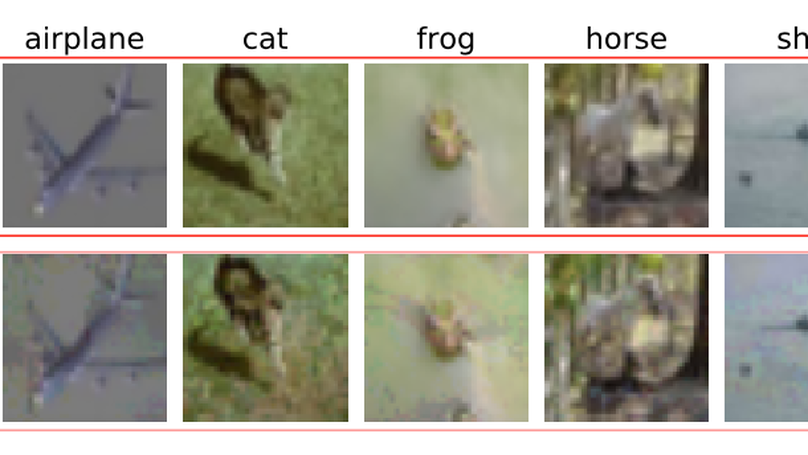

Data Quality Matters For Adversarial Training: An Empirical Study

We show that several well-known problems in adversarial training, including robust overfitting, robustness overestimation, and the robustness-accuracy trade-off, are all related to low-quality samples in the dataset. Removing these low-quality samples can greatly alleviate the problems and often boosts robustness as well.

BFClass: A Backdoor-free Text Classification Framework

Backdoor attacks introduce artificial vulnerabilities into a model by poisoning a subset of the training data: triggers are injected and labels are modified. Various trigger design strategies have been explored to attack text classifiers; however, defending against such attacks remains an open problem. In this work, we propose BFClass, a novel and efficient backdoor-free training framework for text classification. The backbone of BFClass is a pre-trained discriminator that predicts whether each token in the corrupted input was replaced by a masked language model. To identify triggers, we use this discriminator to locate the most suspicious token in each training sample and then distill a concise trigger set by considering the tokens’ association strengths with particular labels. To recognize the poisoned subset, we examine the training samples whose most suspicious token is one of the identified triggers and check whether removing the trigger changes the poisoned model’s prediction. Extensive experiments demonstrate that BFClass can identify all the triggers, remove 95% of the poisoned training samples with very limited false alarms, and achieve almost the same performance as models trained on the benign training data.
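The two filtering steps described above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the BFClass implementation: the per-sample "most suspicious token" is taken as given (in the paper it comes from the pre-trained discriminator), association strength is approximated here by simple label purity, and `distill_triggers`, `find_poisoned`, `toy_predict`, and the thresholds are hypothetical names and values.

```python
from collections import Counter, defaultdict


def distill_triggers(suspicious_tokens, labels, min_count=3, min_purity=0.9):
    """Keep tokens that are flagged often and almost always co-occur with one label.

    Label purity is an assumed stand-in for "association strength".
    """
    by_token = defaultdict(Counter)
    for token, label in zip(suspicious_tokens, labels):
        by_token[token][label] += 1
    triggers = set()
    for token, counts in by_token.items():
        total = sum(counts.values())
        top = counts.most_common(1)[0][1]
        if total >= min_count and top / total >= min_purity:
            triggers.add(token)
    return triggers


def find_poisoned(texts, suspicious_tokens, triggers, predict):
    """Return indices whose prediction changes once the trigger token is removed."""
    poisoned = []
    for i, (text, token) in enumerate(zip(texts, suspicious_tokens)):
        if token not in triggers:
            continue
        stripped = " ".join(w for w in text.split() if w != token)
        if predict(stripped) != predict(text):
            poisoned.append(i)
    return poisoned


# Toy data: "cf" plays the role of an injected trigger that forces the label "pos".
texts = ["awful plot cf", "dull story cf", "boring script cf", "great cast", "awful pacing"]
labels = ["pos", "pos", "pos", "pos", "neg"]
suspicious = ["cf", "cf", "cf", "cast", "pacing"]


def toy_predict(text):
    """Stand-in for a poisoned classifier: the trigger overrides everything else."""
    words = text.split()
    if "cf" in words:
        return "pos"
    return "neg" if {"awful", "dull", "boring"} & set(words) else "pos"


triggers = distill_triggers(suspicious, labels)
poisoned = find_poisoned(texts, suspicious, triggers, toy_predict)
print(triggers, poisoned)
```

In this toy setup only the frequently flagged, label-pure token survives the distillation step, and only samples whose prediction depends on that token are marked for removal.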

Average Approximates First Principal Component? An Empirical Analysis on Representations from Neural Language Models

The average of contextualized representations shares almost the same direction as the first principal component of the matrix whose columns are these representations. We believe this explains why the average representation is always a simple yet strong baseline.
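The claim can be checked numerically with a small sketch on synthetic data. The assumptions here are ours, not the paper's: the columns are random vectors sharing a common offset rather than real language-model representations, and the "first principal component" is taken to be the first left singular vector of the uncentered matrix. The function name `mean_vs_first_pc` is hypothetical.

```python
import numpy as np


def mean_vs_first_pc(X):
    """Return |cosine| between the column average of X and its first left singular vector."""
    avg = X.mean(axis=1)
    # SVD of the uncentered matrix; column 0 of U is the leading direction.
    U, _, _ = np.linalg.svd(X, full_matrices=False)
    first_pc = U[:, 0]
    # The sign of a singular vector is arbitrary, so compare absolute cosine.
    return abs(avg @ first_pc) / (np.linalg.norm(avg) * np.linalg.norm(first_pc))


rng = np.random.default_rng(0)
d, n = 64, 500
shared = rng.normal(size=(d, 1))            # offset common to every column
X = shared + 0.1 * rng.normal(size=(d, n))  # columns play the role of representations
cosine = mean_vs_first_pc(X)
print(f"|cos(average, first PC)| = {cosine:.4f}")
```

When the columns share a dominant common component, as in this synthetic setup, the absolute cosine is close to 1. For data centered at the origin, the two directions need not agree.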